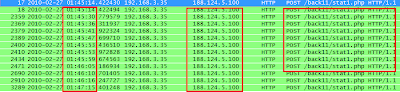

Virus Bulletin 2013, one of the top computer security conferences, was held this year in Berlin, Germany, from 2 to 4 October 2013, and I was invited to speak about my research on a behavioural method for detecting HTTP botnets. The detection method focuses on a few key areas:

- How do we differentiate traffic generated by automated clients from human-initiated traffic?

- How do we examine outbound HTTP traffic and distinguish traffic generated by legitimate sources, such as web browsers, from malicious botnet command-and-control (C&C) traffic over HTTP?

- How do we monitor idle hosts based on the volume of traffic they generate, and judge whether a host is suspicious based on repetitive connections to a C&C server?
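To make the last point more concrete, here is a minimal sketch of one way to flag "repetitive connections" from a host. This is not the method presented in the talk; it is a simple periodicity check that assumes we already have (timestamp, destination) pairs for the outbound HTTP requests of a host believed to be idle. The function name `find_beacons` and the thresholds `min_connections` and `max_cv` are hypothetical choices for illustration only.

```python
from collections import defaultdict
from statistics import mean, pstdev


def find_beacons(events, min_connections=5, max_cv=0.1):
    """Flag destinations contacted at suspiciously regular intervals.

    events: iterable of (timestamp_in_seconds, destination_host) pairs for
    outbound HTTP requests from a single host that is assumed to be idle.
    min_connections and max_cv are hypothetical thresholds, not tuned values.
    Returns a sorted list of (destination, mean_interval, cv) tuples.
    """
    # Group request timestamps by destination host.
    times_by_dest = defaultdict(list)
    for ts, dest in events:
        times_by_dest[dest].append(ts)

    suspicious = []
    for dest, times in times_by_dest.items():
        if len(times) < min_connections:
            continue  # too few connections to judge repetitiveness
        times.sort()
        # Gaps between consecutive requests to the same destination.
        intervals = [later - earlier for earlier, later in zip(times, times[1:])]
        avg = mean(intervals)
        if avg <= 0:
            continue  # all requests at the same instant; nothing to measure
        # Coefficient of variation: a low value means near-constant spacing,
        # which is what an automated client polling a C&C server tends to produce.
        cv = pstdev(intervals) / avg
        if cv <= max_cv:
            suspicious.append((dest, avg, cv))
    return sorted(suspicious)


if __name__ == "__main__":
    # Hypothetical data: a bot polling every 60 s, plus irregular human browsing.
    bot = [(t, "c2.example") for t in range(0, 600, 60)]
    human = [(3, "news.example"), (50, "news.example"), (51, "news.example"),
             (400, "news.example"), (405, "news.example")]
    for dest, avg, cv in find_beacons(bot + human):
        print(f"{dest}: mean interval {avg:.0f} s, CV {cv:.2f}")
    # prints "c2.example: mean interval 60 s, CV 0.00"
```

Here the evenly spaced requests to `c2.example` are flagged, while the irregular requests to `news.example` are not. Real bots often add random jitter to their check-in intervals, so a fixed threshold like this would need tuning and would only be one signal among several.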

This was one of the very few presentations that the audience found really interesting. However, it was on the final day of the conference, and the audience was smaller than on the previous two days, which was a little disappointing. The evening of 3 October featured a fantastic drinks party, followed by a gala dinner and sensational German dance performances. So a smaller audience on the last day of the conference wasn't too surprising to me.

On the other hand, several presentations in the technical stream were interesting. One that caught my attention was a talk by speakers from Microsoft, revealing a major problem that the AV industry may have started facing: attacks on the AV automation systems themselves. Today, the industry relies heavily on telemetry data and sample sharing between vendors to respond quickly to zero-day threats. AV automation systems are primarily built to auto-classify hundreds of thousands of malware samples received every day and to generate signatures automatically. Attackers have now started probing these automation systems to find loopholes in automatic signature generation, and exploiting them by injecting specially crafted clean files into the telemetry system to poison it. Imagine the mess that a significant volume of such crafted files received via telemetry could cause.

The entire presentation by the Microsoft speakers can be viewed here: Working together to defeat attacks against AV automation

Another presentation I found interesting was from F-Secure, titled "Statistically effective protection against APT attacks", about their research into several available exploit mitigation methods and which of them is most effective at preventing exploits from executing shellcode. The research examines how effective mitigation methods such as application sandboxing, client application hardening, memory-handling mechanisms for exploit prevention, and network hardening are, and which is most effective against some in-the-wild exploits. Quite useful research.

Overall, it was a fantastic conference, and I got the opportunity to socialize, meet a lot of people, share ideas and talk about lots of things.

All the slides from the VB 2013 presentations are available here.