Recent botnets and APTs predominantly use HTTP as their primary channel for communicating with command and control (C&C) servers, and this usage has increased significantly over the last few quarters. One study shows that more than 60% of botnets use HTTP for C&C communication, and the number keeps increasing. The distribution below shows the popularity and dominance of HTTP among the top botnet families.

There are a couple of apparent reasons to use HTTP as the primary C&C channel. First, it cannot be blocked on the network, since it carries a major share of today's internet traffic. Second, it is nearly impossible to differentiate legitimate HTTP traffic from malicious traffic at the network perimeter unless you have known signatures for it. This makes HTTP even more popular among malware authors.

The industry is well aware that traditional signature-based approaches can no longer keep up with the sophistication of today's threats, and their limitations have driven the shift of focus from signatures to behaviour. But we need to answer the question: what are the suspicious behaviours we should look for on the network?

Before we answer that question, I'd like to shed some light on the typical lifecycle of botnet command and control over HTTP.

- A bot typically connects to a small number of C&C domains. It may try to resolve many domains over a short period of time when it uses a domain generation algorithm (DGA), but once it successfully resolves a domain and connects, it stays connected to the same domain for its lifetime.

- Once connected, it sends an HTTP GET or POST request to a specific resource (URI) on the C&C server as its registration / phone-home communication.

- It either executes the command received from the C&C server, or sleeps for a fixed interval before connecting back and pulling the next command from the C&C.

- Subsequently, it connects to the server at fixed / stealthy intervals and keeps pulling commands, or it might send keepalive messages to announce its existence periodically.

Zeus C&C communication over the network

A machine infected with a bot that communicates with its control server periodically is generating automated traffic. Since legitimate software and websites can exhibit this behaviour too, another question comes up: how do we differentiate browser / human-initiated traffic from automated traffic? There are certain facts we can rely on:

- It is abnormal for most users to connect to a specific server resource repeatedly and at periodic intervals. There might be dynamic web pages that periodically refresh content, but these legitimate behaviours can be detected by looking at the server responses.

- The first connection to a web server will normally return a response larger than 1 KB, because these are web pages. A response of just 100 or 200 bytes is hard to imagine under usual conditions.

- Legitimate web pages normally have embedded images, JavaScript, tags, links to several other domains, links to several file paths on the same domain, and so on. These are the characteristics of normal web pages.

- Browsers send the full set of HTTP headers in a request, unless it is intercepted by MITM tools that modify or delete headers.

All of the above facts point us to a specific behaviour we can look for on the network: repetitive connections to the same server resource over HTTP.

Assume that we choose to monitor a machine under idle conditions, when the user is not logged on. In that state we can distinguish botnet activity with a high level of accuracy. We monitor the idle host because that is the period when traffic volume is low. It is relatively easy to identify an idle host from the nature of the traffic it generates (usually version updates, version checks, keepalives, etc.). We would never expect an idle host to generate traffic to yahoo.com or hotmail.com.

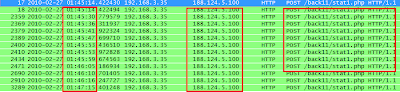

Under these conditions, if the machine is infected with the SpyEye botnet, traffic will look like this:

Notice that Zeus (in the previous screenshot) and SpyEye each connect to one control domain and keep sending an HTTP POST to a specific server resource, every 6 and 31 seconds respectively. Algorithmically, while the host is idle, we'd deem its activity suspicious when:

- The number of unique domains a system connects to is less than a certain threshold

- The number of unique URIs a system connects to is less than a certain threshold

- For each unique domain, the number of times a URI is repetitively connected to is greater than a certain threshold

Assuming the volume of traffic from the host is low, if we evaluate the preceding conditions over a window of, say, two hours, we might come up with the following:

- Number of unique domains = 1 (less than the threshold)

- Number of unique URIs connected = 1 (less than the threshold)

- For each unique domain, the number of times a unique URI is repetitively connected to = 13 (greater than threshold)
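The three conditions and the two-hour example above can be sketched in a few lines of Python. This is only an illustration, not the proof of concept itself: the `Request` record, the function names, and the threshold values (`max_domains`, `max_uris`, `min_repeats`) are all assumptions chosen for the example, and a "unique URI" is counted here as a distinct (domain, URI) pair.

```python
from collections import Counter
from typing import Iterable, NamedTuple


class Request(NamedTuple):
    """One HTTP request seen from a host (hypothetical record layout)."""
    timestamp: float  # seconds since the start of the capture
    domain: str
    uri: str


def window_metrics(requests: Iterable[Request], start: float, length: float) -> dict:
    """Summarize the requests that fall inside [start, start + length)."""
    hits = Counter(
        (r.domain, r.uri)
        for r in requests
        if start <= r.timestamp < start + length
    )
    return {
        "unique_domains": len({domain for domain, _ in hits}),
        "unique_uris": len(hits),
        "max_repeats": max(hits.values(), default=0),
    }


def is_suspicious(metrics: dict, max_domains: int = 3, max_uris: int = 5,
                  min_repeats: int = 10) -> bool:
    """Apply the three conditions; the default thresholds are illustrative only."""
    return (
        metrics["unique_domains"] < max_domains
        and metrics["unique_uris"] < max_uris
        and metrics["max_repeats"] > min_repeats
    )


# An idle host that sends 13 POSTs to one resource within a two-hour window.
beacons = [Request(i * 550.0, "c2.example", "/gate.php") for i in range(13)]
metrics = window_metrics(beacons, start=0.0, length=2 * 60 * 60)
print(metrics)                 # {'unique_domains': 1, 'unique_uris': 1, 'max_repeats': 13}
print(is_suspicious(metrics))  # True
```

In practice the thresholds would need tuning against the normal idle traffic of the monitored hosts.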

The approach, however, does not mandate that the repetitive activity be seen at fixed intervals. If we choose to monitor within a larger window, we could detect stealthier activity. The following flowchart represents a possible sequence of operations.

The first few checks are important to determine that the host isn't talking too much. First, Total URI > threshold establishes that we have enough traffic to look into. Next, the Total Domain access >= Y check establishes that the number of domains accessed is not too large. The final check is whether Total unique URIs < Z. The source ends up on the suspicious list if we believe it has generated repetitive connections.
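As a rough sketch, the sequence of checks could look like the following. The function name `screen_host` and every threshold value are hypothetical. The code also assumes that a host at or above the domain threshold Y is dropped from further analysis, and that the repetition test compares the per-URI count against its own threshold.

```python
from collections import Counter
from typing import List, Tuple


def screen_host(requests: List[Tuple[str, str]],
                min_total: int = 20,          # "threshold" for Total URI
                max_domains: int = 5,         # Y (assumed: >= Y means too many domains)
                max_unique_uris: int = 10,    # Z
                repeat_threshold: int = 10) -> List[Tuple[str, str, int]]:
    """Return the (domain, uri, count) entries that look repetitive.

    `requests` holds one (domain, uri) pair per HTTP request seen from a
    single host inside the monitoring window. An empty result means the
    host does not go on the suspicious list.
    """
    # Check 1: is there enough traffic to look into?
    if len(requests) <= min_total:
        return []

    # Check 2: the host must not be talking to too many domains.
    if len({domain for domain, _ in requests}) >= max_domains:
        return []

    # Check 3: the number of unique URIs must stay below Z.
    counts = Counter(requests)
    if len(counts) >= max_unique_uris:
        return []

    # Repetition test: keep only resources requested more often than the threshold.
    return [(d, u, n) for (d, u), n in counts.items() if n > repeat_threshold]


window = (
    [("c2.example", "/gate.php")] * 15
    + [("update.example", "/version")] * 5
    + [("update.example", "/check")] * 4
    + [("time.example", "/sync")] * 3
    + [("time.example", "/ping")] * 3
)
print(screen_host(window))  # [('c2.example', '/gate.php', 15)]
```

Returning the offending entries rather than a plain boolean keeps the domain and URI available for the follow-up examination of the connection.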

For instance, if Total URIs = 30, Total Domain access = 3, and Total Unique URI accessed = 5, repetitive URI access from the host is guaranteed: 30 requests spread over 5 unique URIs means at least one URI was requested 6 or more times. Now, if the number of repeated accesses to any particular URI crosses the threshold (for example, one URI accessed 15 times within a window), we can examine the connection further and apply some heuristics to increase our confidence level and eliminate false positives. Some heuristics we can apply:

- Minimal HTTP headers sent in the request

- Absence of UA/referrer headers

- Small server responses that lack the structure of a usual web page

- Domain registration time and perhaps reputation as well.
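These heuristics could be combined into a simple checklist, as in the sketch below. The `Exchange` record, the heuristic labels, and the cut-off values (4 headers, 1024 bytes, 30 days) are assumptions made for illustration. Domain reputation is left out because it would need an external data source.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Exchange:
    """One request/response pair for a flagged URI (hypothetical layout)."""
    request_headers: Dict[str, str]
    response_body: bytes
    domain_age_days: Optional[int] = None  # None when registration data is unavailable


def heuristic_hits(ex: Exchange, min_headers: int = 4, min_body: int = 1024,
                   min_domain_age_days: int = 30) -> List[str]:
    """Return the labels of the heuristics that this exchange triggers."""
    names = {name.lower() for name in ex.request_headers}
    hits = []
    if len(names) < min_headers:
        hits.append("minimal_headers")
    if "user-agent" not in names or "referer" not in names:
        hits.append("missing_ua_or_referer")
    body = ex.response_body.lower()
    # Very rough structure test: a small body with no <html> tag at all.
    if len(body) < min_body and b"<html" not in body:
        hits.append("small_unstructured_response")
    if ex.domain_age_days is not None and ex.domain_age_days < min_domain_age_days:
        hits.append("young_domain")
    return hits


beacon = Exchange(
    request_headers={"Host": "c2.example", "Content-Length": "48"},
    response_body=b"OK",
    domain_age_days=12,
)
print(heuristic_hits(beacon))
```

The more heuristics an exchange triggers, the higher the confidence. A single hit on its own is weak evidence, since, for example, a browser also omits the Referer header on a directly typed URL.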

I implemented a proof of concept for this approach and could detect the repetitive activity with relative ease.

Applying this method to several top botnet families exhibiting similar behaviour, I could detect them with a medium to high level of confidence.

Behavioural detection methods will be the key to detecting next-generation threats. Given the complexity and sophistication of recent advanced attacks, such detection approaches can address threats proactively, without waiting for signature updates, and will prove to be much faster.
